The Non-Invasive Vital Signs Monitoring Project

Engineering Meets Healthcare Innovation

ABSTRACT

In recent years, contactless and remote monitoring of patients has gained momentum owing to its several advantages over traditional contact monitoring with leads and wires. The Non-Invasive Vital Signs Monitoring Project (NIVS) aims to extract heart rate data from both healthy volunteers and patients in real-world hospital scenarios using standard red-green-blue (RGB) and thermal imaging cameras, and thereby to overcome the limitations of current contact systems. This article describes the underlying scientific principles, the execution and the potential benefits of such a system in the local healthcare setting.

KEYWORDS

Contactless Monitoring, RGB camera, Vital Signs, Thermal Imaging Camera, Clinical Monitoring

AIM AND INTRODUCTION

NIVS is a collaborative project between the University of Malta’s Centre for Biomedical Cybernetics, the Faculty of Medicine and Surgery and Mater Dei Hospital. The project is funded by the Malta Council for Science & Technology (MCST), for and on behalf of the Foundation for Science and Technology, through the Fusion: R&I Technology Development Programme. Ethical approval was obtained from the Faculty Research Ethics Committee of Medicine and Surgery.

The aim of the project is to design a system capable of measuring heart rate in real-time in clinical settings, without modifications to patient care. Red-green-blue (RGB) cameras and thermal imaging cameras are used for this purpose.

The underlying principle behind the extraction of cardiovascular data from RGB videos is remote photoplethysmography (rPPG). This principle is based on the absorption of specific wavelengths of visible light by haemoglobin in the blood perfusing the skin.1 The same principle underlies pulse oximetry, but whereas a pulse oximeter uses its own light sources at specific wavelengths, rPPG relies on ambient visible light.2 Algorithms can detect the pulsatile variations in the light reflected from the skin of various body parts, most commonly the face, neck and hands, which serve as regions of interest (ROIs) for rPPG. From these variations, the rate and rhythm of the heartbeat can be deduced.3–5
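
The CNN used in NIVS learns the mapping from video to heart rate from data, but the rPPG principle itself can be illustrated with a classic hand-crafted baseline: average the green channel (where the pulsatile haemoglobin signal is typically strongest) over a skin ROI in each frame, then take the dominant frequency of that trace within a plausible heart-rate band. This is a minimal sketch and not the project’s method; the ROI box, the 0.7-4 Hz band and the synthetic test clip are illustrative assumptions.

```python
from __future__ import annotations

import numpy as np


def mean_green_trace(frames: np.ndarray, roi: tuple[int, int, int, int]) -> np.ndarray:
    """Average the green channel inside a fixed rectangular ROI for every frame.

    frames: array of shape (T, H, W, 3) in RGB order.
    roi: hypothetical (top, left, height, width) box, e.g. around the forehead.
    """
    top, left, h, w = roi
    patch = frames[:, top:top + h, left:left + w, 1]  # channel index 1 = green
    return patch.reshape(patch.shape[0], -1).mean(axis=1)


def estimate_heart_rate_bpm(trace: np.ndarray, fps: float,
                            lo_hz: float = 0.7, hi_hz: float = 4.0) -> float:
    """Return the dominant pulse frequency of an rPPG trace, in beats per minute."""
    x = trace - trace.mean()          # drop the constant (DC) skin reflectance
    x = x * np.hanning(len(x))        # taper the ends to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fps)
    band = (freqs >= lo_hz) & (freqs <= hi_hz)  # 0.7-4 Hz, i.e. 42-240 bpm
    peak_hz = freqs[band][np.argmax(spectrum[band])]
    return 60.0 * float(peak_hz)


# Synthetic example: a 30-second, 30 fps clip whose green channel pulses at 1.2 Hz.
fps, seconds = 30, 30
t = np.arange(fps * seconds) / fps
frames = np.full((len(t), 16, 16, 3), 120.0)
frames[..., 1] += 0.5 * np.sin(2 * np.pi * 1.2 * t)[:, None, None]
trace = mean_green_trace(frames, (4, 4, 8, 8))
print(estimate_heart_rate_bpm(trace, fps))  # approximately 72 bpm
```

With real footage, motion and lighting changes add energy to the same frequency band, which is one reason learned models such as CNNs are used instead of this simple spectral peak.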

Many algorithms exist to extract heart rate; this project uses a convolutional neural network (CNN). A CNN is a type of deep learning model that learns to recognise features and objects in images or video and, once trained, can apply this knowledge to new videos.6-8 In the NIVS project, videos were recorded together with the subjects’ reference heart rates, and these were fed to the CNN so that it could learn to extract heart rate data from RGB videos.

CNN models have been shown to estimate heart rate from videos of patients’ faces with reasonable accuracy for medical purposes. Nonetheless, current models have a number of limitations. Their performance is often degraded by changes in ambient light and by movement of the subject or of other people in the patient’s immediate surroundings. Videos processed by CNNs have so far been quite short, and few studies have obtained real-time measurements.9-11 Our study aims to overcome some of these limitations and to test a CNN-based system in a real-world hospital setting.

METHODOLOGY

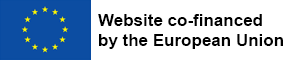

The project was designed in two stages. For the first stage, twenty-seven healthy volunteers with no documented cardiovascular pathology were recruited and their consent was obtained. During data collection, each volunteer lay on a couch while two cameras (a Canon Legria HF G25 digital camera and a Xiaomi Redmi 9A smartphone camera) were positioned two metres from the subject’s face. Ground truth heart rate was recorded with electrocardiogram electrodes and pulse oximetry, which connected the participant to a BIOPAC MP150 data acquisition system. Video clips were recorded under varying conditions: in bright light, in minimal light, and with the cameras moved back to four metres from the subject’s face. A further clip captured random movements of the subject’s head, arms and legs in order to assess the effect of movement on the accuracy of the results. These videos, together with the corresponding ground truth data, were used to train the CNN to estimate heart rate from video.

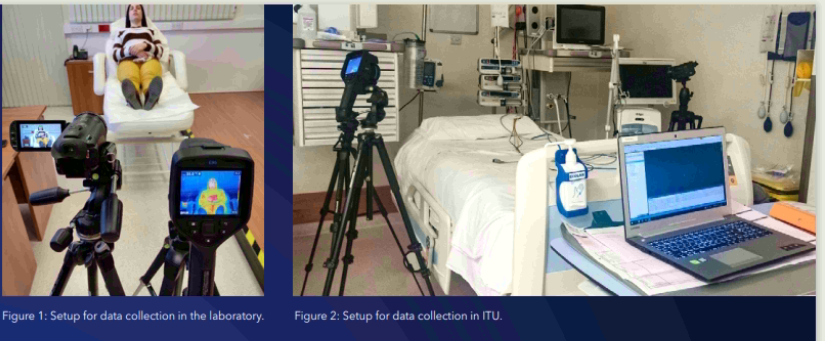

The second stage of the study took place in the Intensive Therapy Unit (ITU) at Mater Dei Hospital. This stage involved recording videos of thirty-five consenting patients; no exclusion criteria were applied other than that all participants had to be over eighteen years of age. The aim was to collect real-world data during normal ongoing patient care, with patients moving freely and other individuals visible around them. The cameras were set up in a practical manner around the patient’s bed, and ten-minute video clips were taken of patients at rest, during physiotherapy sessions where applicable, during minor procedures such as venous catheterisation or blood sampling, and at night, when overhead lighting was dimmed or switched off. In this stage, ground truth data were obtained with a software program (VS Capture) that recorded real-time readings from the Philips IntelliVue monitors used in the ITU; these readings were then exported to Microsoft Excel files.
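
Before monitor readings can serve as reference values, each video clip has to be matched with the readings recorded during the same time window. A minimal sketch of one simple approach averages all readings that fall inside a clip. It assumes the readings have already been parsed into hypothetical (seconds, bpm) pairs and that the camera and monitor clocks are synchronised; it makes no assumption about the VS Capture export format or the project’s own alignment procedure.

```python
from __future__ import annotations

from statistics import mean


def reference_hr(readings: list[tuple[float, float]],
                 clip_start_s: float, clip_end_s: float) -> float | None:
    """Mean monitor heart rate over the window [clip_start_s, clip_end_s).

    readings: hypothetical (seconds since recording start, heart rate in bpm)
    pairs. Assumes monitor and camera clocks are already synchronised.
    Returns None when no reading falls inside the window.
    """
    in_window = [hr for t, hr in readings if clip_start_s <= t < clip_end_s]
    return mean(in_window) if in_window else None


readings = [(0.0, 80), (10.0, 82), (20.0, 84), (30.0, 90), (40.0, 92)]
print(reference_hr(readings, 0, 30))   # 82
print(reference_hr(readings, 30, 60))  # 91
print(reference_hr(readings, 60, 90))  # None
```

Returning None rather than a default value makes clips without any monitor data (for example, during a disconnection) easy to exclude from evaluation.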

The ITU videos were used as test data: video frames from the ITU environment were fed into the developed CNN to extract heart rate, and its accuracy was assessed against readings from the standard Philips IntelliVue monitors.

RESULTS

Model Description

Two deep learning models were used in this study to estimate heart rate: the spatio-temporal CNNs C3D and 3D-DenseNet.12-14 C3D was originally developed for video classification and, as reported by Tran et al., has a powerful spatio-temporal feature extraction capability.12 This stems from its architecture: its three-dimensional convolutions span consecutive frames as well as the pixels within each frame, allowing it to model both appearance and motion features in the video.
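
The difference between a 3D convolution and an ordinary 2D one can be shown in a few lines of NumPy. This minimal sketch slides a single 3×3×3 kernel over a (time, height, width) stack of frames, so each output value depends on three consecutive frames rather than one. C3D stacks many learned 3×3×3 kernels over many channels; the hand-set “temporal difference” kernel here is a hypothetical example chosen only to show that such a kernel can respond to change over time, a cue that a purely spatial 2D kernel cannot capture.

```python
import numpy as np


def conv3d_valid(volume: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 3D cross-correlation, stride 1, no padding ('valid').

    volume: (T, H, W) stack of greyscale frames; kernel: (kt, kh, kw).
    Deep learning libraries call this operation a 3D convolution.
    """
    kt, kh, kw = kernel.shape
    T, H, W = volume.shape
    out = np.empty((T - kt + 1, H - kh + 1, W - kw + 1))
    for t in range(out.shape[0]):
        for y in range(out.shape[1]):
            for x in range(out.shape[2]):
                window = volume[t:t + kt, y:y + kh, x:x + kw]
                out[t, y, x] = np.sum(window * kernel)
    return out


# Hypothetical kernel: mean of a 3x3 patch in frame t+2 minus the same patch in frame t.
temporal_diff = np.zeros((3, 3, 3))
temporal_diff[0] = -1 / 9
temporal_diff[2] = 1 / 9

static = np.ones((5, 8, 8))                                        # no change over time
brightening = np.arange(5.0)[:, None, None] * np.ones((5, 8, 8))  # frame t has value t

print(conv3d_valid(static, temporal_diff).shape)             # (3, 6, 6)
print(np.abs(conv3d_valid(static, temporal_diff)).max())     # close to 0
print(conv3d_valid(brightening, temporal_diff)[0, 0, 0])     # close to 2
```

The static clip produces (near) zero response everywhere, while the clip that brightens over time produces a response of about 2 at every position, showing that the kernel encodes temporal change.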

Datasets

As mentioned in previous sections, data were collected from volunteers in a laboratory environment simulating the various conditions that would later be encountered in hospital. The recorded videos were split into 30-second clips, and the frames extracted from these clips were resized to 64×64 pixels. Although this frame size may seem very small, the models gave good estimates once trained. For the current stage, over 500 short clips covering different lighting and motion conditions were used, which should help make the models robust to variations in video quality.
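
The two preprocessing steps described above, cutting each recording into 30-second clips and shrinking every frame to 64×64 pixels, can be sketched as follows. How trailing partial clips were handled and which resizing method was used are not stated, so the sketch assumes non-overlapping clips, discards any remainder shorter than 30 seconds, and resizes by simple nearest-neighbour sampling (an area-averaging resize from an image library would be a common alternative).

```python
import numpy as np


def clip_bounds(n_frames: int, fps: float, clip_seconds: float = 30.0) -> list:
    """(start, end) frame indices of consecutive, non-overlapping clips.

    A trailing segment shorter than clip_seconds is dropped (an assumption).
    """
    clip_len = int(round(clip_seconds * fps))
    return [(s, s + clip_len) for s in range(0, n_frames - clip_len + 1, clip_len)]


def resize_nearest(frame: np.ndarray, size: int = 64) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W, C) frame to (size, size, C)."""
    h, w = frame.shape[:2]
    rows = (np.arange(size) * h) // size   # source row for each output row
    cols = (np.arange(size) * w) // size   # source column for each output column
    return frame[rows[:, None], cols[None, :]]


# A hypothetical 80-second recording at 25 fps yields two full 30-second clips.
print(clip_bounds(n_frames=2000, fps=25))                                # [(0, 750), (750, 1500)]
print(resize_nearest(np.zeros((1080, 1920, 3), dtype=np.uint8)).shape)  # (64, 64, 3)
```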

Training

Seventy percent of the processed data was then used to train the models from scratch with different hyperparameters, such as learning rate, batch size and number of epochs. The model that achieved the best results was then selected and tested on new videos to estimate the corresponding heart rate.
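
A 70/30 division can be made either per clip or per subject. A per-subject split, shown in this minimal sketch as one option, keeps all clips of a given volunteer on the same side, so the test set measures performance on faces the model has never seen. The subject and clip identifiers, the 20-clips-per-subject example and the small hyperparameter grid are all hypothetical.

```python
import random
from itertools import product


def split_by_subject(clips, train_fraction=0.7, seed=0):
    """Split (subject_id, clip_id) pairs so that no subject is in both sets."""
    subjects = sorted({subject for subject, _ in clips})
    random.Random(seed).shuffle(subjects)          # reproducible shuffle
    n_train = round(train_fraction * len(subjects))
    train_subjects = set(subjects[:n_train])
    train = [c for c in clips if c[0] in train_subjects]
    test = [c for c in clips if c[0] not in train_subjects]
    return train, test


# Hypothetical data: 27 volunteers with 20 clips each (540 clips in total).
clips = [(f"S{s:02d}", k) for s in range(27) for k in range(20)]
train, test = split_by_subject(clips)
print(len(train), len(test))  # 380 160

# Hypothetical grid: one training run per (learning rate, batch size, epochs).
grid = list(product([1e-3, 1e-4], [8, 16], [50, 100]))
print(len(grid))  # 8
```

Because the split is made over subjects, the clip proportion is exactly 70/30 only when every subject contributes the same number of clips; here 19 of 27 subjects go to training.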

Model Evaluation

Once training had converged, the models were tested on the remaining 30% of the videos. As data from more subjects are acquired, the models can be trained on more varied data, which is known to improve the ability of such networks to generalise when estimating heart rate. The models were evaluated using metrics such as the mean absolute error (MAE). The C3D model achieved an MAE of 4.87 beats per minute (bpm), while 3D-DenseNet performed slightly better, with an MAE of 3.7 bpm. This means that, on average, the heart rates estimated by these models differed from those obtained by gold standard methods by 4.87 bpm and 3.7 bpm respectively.
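
The MAE averages the absolute differences between estimated and reference heart rates over all test clips, so individual estimates may lie further from, or closer to, the reference than the reported figure. A minimal sketch with made-up values:

```python
def mean_absolute_error(estimated, reference):
    """Mean of |estimate - reference| over paired heart-rate values (bpm)."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("expected two non-empty sequences of equal length")
    return sum(abs(e - r) for e, r in zip(estimated, reference)) / len(estimated)


# Made-up example: per-clip errors of 2, 4, 1 and 2 bpm.
estimated = [72, 80, 65, 90]
reference = [70, 84, 66, 88]
print(mean_absolute_error(estimated, reference))  # 2.25
```

Because the absolute value is taken before averaging, over- and under-estimates do not cancel, so the MAE reflects the typical size of the error rather than any systematic bias.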

Given the relatively small number of subjects used to train the models, these results may be considered satisfactory in terms of accuracy for general medical monitoring. Model performance is expected to improve with more data and more varied scenarios.

A number of challenges were encountered during the second stage of the project at the ITU; these are important learning points if the system is to be developed for more widespread use. Positioning the cameras to obtain a good view of the patient’s face without obstructing staff can be difficult. In the ITU, part or all of the patient’s face may be covered by tube ties and masks, or by sterile sheets during procedures such as tracheostomy insertion and central venous catheterisation. A video frame that also includes other areas of skin, such as the hands and neck, is therefore helpful in these situations, but this is not always feasible because of the patient’s position relative to the cameras, or because bandages or clinical equipment obscure these body parts. Staff members and relatives moving around the patient can easily block the cameras’ field of view or accidentally move the cameras, so the cameras would be better placed overhead.

CONCLUSION

This project has the potential to improve patient care and comfort. Patients can move more freely if fewer electrodes and wires are attached to them. Since complications such as post-operative pneumonia and deep vein thrombosis are worsened by immobility, contactless monitoring could help reduce them; it could also enhance rehabilitation, as patients may feel more independent and be better able to cooperate during physiotherapy sessions.15-18 In some categories of patients, such as those with extensive burns and other skin pathologies, a non-contact monitoring system can solve the problem of difficult electrode placement due to poor skin quality.19

Another major advantage of a non-contact system is infection control, particularly of multidrug-resistant organisms (MDROs), which are unfortunately all too common in Mater Dei Hospital. MDROs can spread through inadequately disinfected leads and through staff members’ contact with patients while troubleshooting monitoring leads. The WHO describes the spread of MDROs as one of the main health crises of our time.20

For patients who require isolation, such as those with infectious diseases including COVID-19, and immunosuppressed patients who themselves require protection, a contactless system can reduce the number of times staff members need to enter the room. This can help reduce the spread of infection and improve health economics by reducing wastage of personal protective equipment. This scenario has become a reality in the past two years, with multiple waves of the COVID-19 pandemic.21-23

This project is therefore highly relevant to the current local situation and may also contribute to scientific innovation internationally. Although many obstacles remain in terms of the available technology and the data it can process, the results obtained so far are promising, and we aim to develop a practical operational system that can concretely improve current patient care.

COMPETING INTERESTS

There are no competing interests in relation to this article.

FUNDING STATEMENT

This project is being funded by the Malta Council for Science and Technology as part of the Fusion: R&I Technology Development Programme, bearing code R&I 2018-004-T. Funds from the European Union are being used for this project.

REFERENCES

- Allen J. Photoplethysmography and its application in clinical physiological measurement. Physiol Meas 2007;28(3):R1-39.

- Tamura T, Maeda Y, Sekine M, et al. Wearable Photoplethysmographic Sensors—Past and Present. Electronics 2014; 3(2):282-302.

- Sinhal R, Singh K, Raghuwanshi MM. An Overview of Remote Photoplethysmography Methods for Vital Sign Monitoring. Adv Intell Syst Comput 2020;992:21–31.

- Peng RC, Yan WR, Zhang NL, et al. Investigation of Five Algorithms for Selection of the Optimal Region of Interest in Smartphone Photoplethysmography. J Sensors 2016;2016:6830152.

- Calvo-Gallego E, de Haan G. Automatic ROI for Remote Photoplethysmography using PPG and Color Features. Proceedings of the 10th International Conference on Computer Vision Theory and Applications 2015;2:357-364.

- Albawi S, Mohammed TA, Al-Zawi S. Understanding of a convolutional neural network. Proceedings of the 2017 International Conference on Engineering and Technology 2018.

- Li H, Lin Z, Shen X, Brandt J, et al. A Convolutional Neural Network Cascade for Face Detection. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2015:5325-5334.

- Acharya UR, Oh SL, Hagiwara Y, et al. A deep convolutional neural network model to classify heartbeats. Comput Biol Med 2017;89:389–96.

- Zhan Q, Wang W, de Haan G. Analysis of CNN-based remote-PPG to understand limitations and sensitivities. Biomed Opt Express 2020;11(3):1268-1283.

- Naeini EK, Azimi I, Rahmani AM, et al. A Real-time PPG Quality Assessment Approach for Healthcare Internet-of-Things. Procedia Comput Sci 2019;151:551–8.

- Chaichulee S, Villarroel M, Jorge J, et al. Multi-task Convolutional Neural Network for Patient Detection and Skin Segmentation in Continuous Non-contact Vital Sign Monitoring. 12th IEEE International Conference on Automatic Face and Gesture Recognition 2017:266-272.

- Tran D, Bourdev L, Fergus R, et al. Learning Spatiotemporal Features with 3D Convolutional Networks. Proceedings of the IEEE International Conference on Computer Vision 2015:4489-4497.

- Uemura T, Näppi JJ, Hironaka T, et al. Comparative performance of 3D-DenseNet, 3D-ResNet, and 3D-VGG models in polyp detection for CT colonography. Proc SPIE, Medical Imaging 2020: Computer-Aided Diagnosis 2020;11314:1131435.

- Huang G, Liu Z, van der Maaten L, et al. Densely Connected Convolutional Networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017:4700-4708.

- Malasinghe LP, Ramzan N, Dahal K. Remote patient monitoring: a comprehensive study. J Ambient Intell Humaniz Comput 2019;10:57-76.

- Bartula M, Tigges T, Muehlsteff J. Camera-based system for contactless monitoring of respiration. Annu Int Conf IEEE Eng Med Biol Soc 2013;2013:2672-5.

- Halpern LW. Early Ambulation Is Crucial for Improving Patient Health. Am J Nurs 2017;117(6):15.

- Epstein NE. A review article on the benefits of early mobilization following spinal surgery and other medical/surgical procedures. Surg Neurol Int 2014;5(Suppl 3):S66-73.

- Sofos SS, Tehrani H, Shokrollahi K, et al. Surgical staple as a transcutaneous transducer for ECG electrodes in burnt skin: safe surgical monitoring in major burns. Burns 2013;39(4):818-9.

- WHO. Evidence of hand hygiene to reduce transmission and infections by multi-drug resistant organisms in health-care settings. 2014. Available from: https://www.who.int/gpsc/5may/MDRO_literature-review.pdf

- Suresh Kumar S, Dashtipour K, Abbasi QH, et al. A Review on Wearable and Contactless Sensing for COVID-19 With Policy Challenges. Front Comms Net 2021;2:636293.

- Bahache M, Lemayian JP, Hamamreh J, et al. An Inclusive Survey of Contactless Wireless Sensing: A Technology Used for Remotely Monitoring Vital Signs Has the Potential to Combating COVID-19. RS Open Journal on Innovative Communication Technologies 2020;1(2).

- Taiwo O, Ezugwu AE. Smart healthcare support for remote patient monitoring during covid-19 quarantine. Inform Med Unlocked 2020;20:100428.